
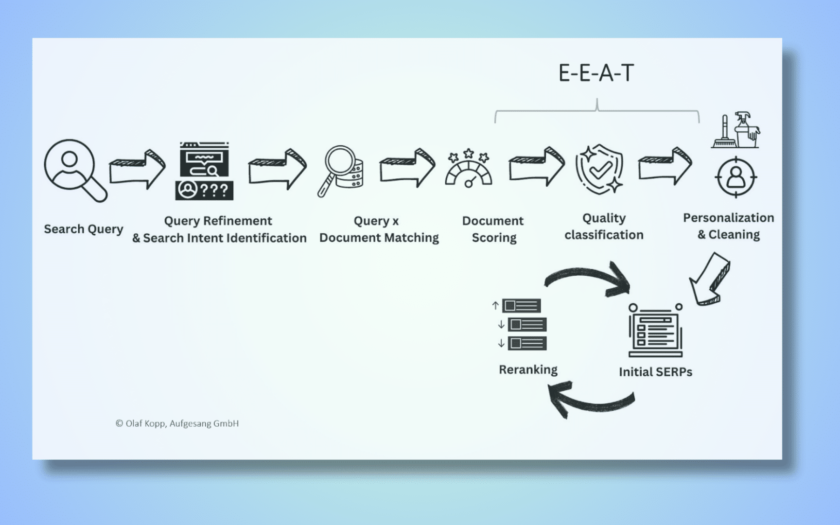

For years, SEO practitioners have wrestled with the puzzling concept of E-E-A-T – experience, expertise, authoritativeness, and trustworthiness – often simplified to vague advice like “build your brand.”

However, behind this public-facing narrative is a sophisticated web of signals that Google evaluates to determine quality, trust, and authority.

Based on over eight years of research into 40+ Google patents and official sources, I’ve identified more than 80 actionable signals that reveal how E-E-A-T works across document, domain, and entity levels.

It’s time to unpack these insights and understand how Google’s algorithms weave relevance, pertinence, and quality into search rankings.

The big misunderstanding about E-E-A-T

Many SEOs mistakenly believe that E-E-A-T has little impact on rankings and dismiss it as a buzzword.

However, Google often uses terms like “helpful content” or “E-E-A-T” as public-facing narratives to frame its search product positively.

These labels encompass numerous independent signals and algorithms working behind the scenes.

To implement E-E-A-T, Google identifies and measures various signals, building a framework that algorithmically promotes trustworthy resources in search results and scales quality evaluations.

This process could also influence the selection of resources for training large language models (LLMs), highlighting the importance of understanding and optimizing for E-E-A-T.

Notably, E-E-A-T is never explicitly mentioned in Google patents, API leaks, or DOJ documents.

Instead, my research focuses on sources that address quality, trust, authority, and expertise – concepts essential to grasping E-E-A-T’s role.

Relevance, pertinence and quality in search engines

Before exploring the researched E-E-A-T signals, it’s important to understand the distinctions between relevance, pertinence, and quality in information retrieval.

Relevance

This refers to the objective relationship between a search query and the corresponding content. Search engines like Google determine this through advanced text analysis.

Ranking factors for relevance evaluate how well a document aligns with search intent, including elements such as:

Keyword usage in headlines, content, and page titles.

TF-IDF and BM25 scoring.

Internal/external linking and anchor texts.

Search intent match.

User signals through systems like DeepRank and RankEmbed BERT.

Passage-based indexing.

Information gain scores.
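To make the TF-IDF and BM25 item concrete, here is a minimal Python sketch of the textbook Okapi BM25 formula. It assumes pre-tokenized, lowercase text, the common defaults k1 = 1.5 and b = 0.75, and a Lucene-style IDF. The function name `bm25_scores` is hypothetical, and nothing here describes how Google actually weights terms.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenized document against a tokenized query with Okapi BM25."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs  # average document length
    # Document frequency: how many documents contain each term at least once.
    df = Counter()
    for d in docs:
        df.update(set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            freq = tf[term]
            if freq == 0:
                continue
            # IDF with +1 inside the log (Lucene-style) so it never goes negative.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = freq + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


if __name__ == "__main__":
    docs = [
        "seo guide for beginners".split(),
        "advanced technical seo audit".split(),
        "chocolate cake recipe".split(),
    ]
    print(bm25_scores(["technical", "seo"], docs))
```

In the example, the second document matches both query terms and scores highest, while the recipe matches neither and scores zero. The k1 parameter limits how much repeating a term helps, and b controls how strongly longer documents are penalized.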

Pertinence

This concept introduces the human element into search results, representing the subjective value of content to individual users.

It recognizes that users searching for the same term may find different levels of usefulness in the same content. For example:

An SEO professional searching for “search engine optimization” expects advanced technical content.

A beginner searching for the same term needs foundational knowledge and basic concepts.

A business owner might seek practical implementation strategies.

Relevance and pertinence are primarily managed by Google’s Ascorer/Muppet system for initial ranking and by Superroot/Twiddler for subsequent reranking.

Relevance is assessed through scoring, which assigns numeric values to quantify specific properties of the input data.

Quality

In search engines, quality operates on multiple levels, serving as an evaluation metric for entities, publishers, authors, domains, and documents.

It is assessed by systems like Coati (formerly Panda) or the Helpful Content System, which evaluate quality at the site, domain, and entity levels.

Google considers quality one of three main pillars, alongside relevance and pertinence, in determining search results.

Unlike relevance (objective and query-dependent) or pertinence (subjective and user-dependent), quality is a broader evaluation that examines content comprehensively.

For instance, quality assessments consider:

How well a site’s content fulfills its purpose.

The expertise demonstrated across multiple pieces of content.

The overall user experience of the website.

Quality assessment draws on signals related to E-E-A-T and page experience, which feed into quality classifiers. These classifiers, trained on a mix of signals, help evaluate content quality.

A classifier is a machine learning model or algorithm that predicts categorical labels. It assigns one of a predefined set of classes to each input instance based on its features.
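The definition above can be illustrated with one of the simplest classifiers, a nearest-centroid model, sketched in Python below. Google’s quality classifiers, their features, and their training data are not public; the three feature names, the labels, and the training examples here are all hypothetical.

```python
from math import dist


def train_centroids(examples):
    """Average the feature vectors of each label, returning label -> centroid."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(centroids, features):
    """Predict the label whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


if __name__ == "__main__":
    # Hypothetical per-page features: [originality, citation quality, grammar], 0..1.
    training = [
        ([0.9, 0.8, 0.95], "high_quality"),
        ([0.85, 0.9, 0.9], "high_quality"),
        ([0.2, 0.1, 0.5], "low_quality"),
        ([0.3, 0.2, 0.4], "low_quality"),
    ]
    centroids = train_centroids(training)
    print(classify(centroids, [0.8, 0.7, 0.9]))  # high_quality
```

The model "learns" only one average point per class, which is enough to show the key idea: a classifier maps a vector of signals to one label from a fixed set.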

The three levels of E-E-A-T evaluation

Google’s ranking system has evolved into a multi-dimensional framework that evaluates content at three levels: document, domain, and source entity, with increasing emphasis on E-E-A-T principles.

Document level: Evaluates the quality of individual pieces of content.

Site or domain level: Considers domain-wide quality factors that influence an entire website or specific areas within it.

Source entity level: Assesses the originators or publishers of content (such as authors and organizations) based on E-E-A-T criteria.

The term “source entity” refers to these originators, a concept aligned with Google’s entity-based search introduced through the Hummingbird update.

This update enabled Google to apply E-E-A-T principles to these entities, further refining content evaluation.


Potential signals for E-E-A-T quality assessment: An overview

In 2022, I covered 14 ways Google may evaluate E-A-T here on Search Engine Land.

More than two years later, the framework has expanded to include new dimensions of E-E-A-T, reflecting Google’s ongoing refinement of quality assessment.

These updates emphasize expertise, authoritativeness, trustworthiness, and the critical role of first-hand experience in content evaluation.

In this section, I won’t delve into each of the 80+ signals I’ve identified. Instead, I’ll provide a summarized overview. (For a detailed breakdown, refer to the infographic below.)

Document-level signals

Content originality and comprehensiveness

A high ratio of unique content that thoroughly covers topics, satisfies various user intents, and is regularly updated.

Long-form, in-depth content showcasing expertise is particularly valued.

Grammar and professional presentation

Clean, error-free content with proper formatting and structure.

Signals professionalism and subject matter expertise.

Quality of external references and citations

Includes high-quality outbound links to authoritative sources.

Proper citation practices with a clean link profile that demonstrates field knowledge.

Entity relationships and topical expertise indicators

Demonstrates connections between relevant entities, consistent co-occurrence patterns of related concepts, and proper entity usage to indicate expertise.
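A minimal sketch of how co-occurrence patterns between entities could be counted, assuming entities have already been extracted from each document. The extraction step, the input format, and the `cooccurrence_counts` name are hypothetical. Pairs that keep appearing together across many documents are the kind of pattern this signal refers to.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(documents):
    """Count in how many documents each unordered pair of entities appears together.

    documents: list of entity lists, one per document (entities assumed pre-extracted).
    """
    pairs = Counter()
    for entities in documents:
        # set() ignores repeats within a document; sorted() gives each pair one order.
        for a, b in combinations(sorted(set(entities)), 2):
            pairs[(a, b)] += 1
    return pairs


if __name__ == "__main__":
    docs = [
        ["BM25", "TF-IDF", "PageRank"],
        ["BM25", "TF-IDF"],
        ["PageRank", "link graph"],
    ]
    for pair, count in cooccurrence_counts(docs).most_common(2):
        print(pair, count)
```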

User engagement metrics

Metrics such as click-through rates (CTR), dwell time, and direct URL visits reflect the value and authority of content over time.

Search term relevance

Matches user intent and effectively covers primary and related queries.

Uses appropriate vocabulary and concepts relevant to the topic.

Domain-level signals

Trustworthiness

Business verification

Consistent business information (addresses, phone numbers, domain names) to establish legitimacy.
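As a rough illustration of checking business information for consistency, the sketch below normalizes a name, address, phone number, and domain from several sources and tests whether they all agree. The record format and helper names are hypothetical, and a production system would need much fuzzier matching (for example, treating "Street" and "St" as equal).

```python
import re


def normalize_phone(phone):
    """Keep digits only, so '+1 (555) 010-0199' and '1-555-010-0199' compare equal."""
    return re.sub(r"\D", "", phone)


def normalize_text(value):
    """Lowercase, turn punctuation into spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^\w\s]", " ", value.lower()).split())


def is_consistent(records):
    """True if every source lists the same name, address, phone, and domain."""
    keys = {
        (
            normalize_text(r["name"]),
            normalize_text(r["address"]),
            normalize_phone(r["phone"]),
            r["domain"].lower().removeprefix("www."),  # Python 3.9+
        )
        for r in records
    }
    return len(keys) == 1


if __name__ == "__main__":
    website = {"name": "Acme Corp.", "address": "1 Main St.",
               "phone": "+1 (555) 010-0199", "domain": "www.acme.example"}
    directory = {"name": "ACME Corp", "address": "1 main st",
                 "phone": "1-555-010-0199", "domain": "acme.example"}
    print(is_consistent([website, directory]))  # True
```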

Link profile quality

Diverse, high-quality backlinks from reputable sources, clean outbound links to authoritative sites, and proximity to trusted seed sites in link graphs.

The relevance of anchor text and contextual linking also plays a role.
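Proximity to trusted seed sites can be pictured as hop distance in the link graph. The sketch below runs a breadth-first search outward from a hypothetical seed set. It assumes every link counts equally, whereas a real system would likely weight links, so treat it as an illustration of the idea rather than Google’s method.

```python
from collections import deque


def seed_distances(links, seeds):
    """Hop distance from the nearest trusted seed to every reachable site.

    links: dict mapping a site to the list of sites it links out to.
    """
    distance = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        site = queue.popleft()
        for target in links.get(site, ()):
            if target not in distance:  # BFS: the first visit is the shortest hop count
                distance[target] = distance[site] + 1
                queue.append(target)
    return distance


if __name__ == "__main__":
    links = {
        "seed.example": ["a.example"],
        "a.example": ["b.example"],
        "spam.example": ["a.example"],
    }
    print(seed_distances(links, ["seed.example"]))
    # {'seed.example': 0, 'a.example': 1, 'b.example': 2}
```

Sites that no seed can reach, like spam.example in the example, are simply absent from the result, even though they link to sites that are close to a seed.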

Security measures

Using HTTPS, providing transparent information so little has to be inferred, and authenticating contributors with verified personal information and credentials.

Brand consistency

Matching information across links, titles, and content.

Consistent business details and brand recognition through direct queries referencing the site or brand.

Long-term consistency in rankings and site performance also contributes.

Long-term user engagement

Metrics like click-through rates (CTR), dwell time across the domain, direct URL inputs, and consistent selection of particular results for queries over time.

Applies both at the document level and sitewide.
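Because these metrics apply at both the document level and sitewide, the same click log can be rolled up twice. Here is a minimal sketch, assuming a hypothetical log of (url, clicked, dwell_seconds) search impressions. How Google actually collects or weights these signals is not public.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def _summarize(counts):
    """Turn raw counters into CTR and mean dwell time per key."""
    return {
        key: {
            "ctr": c["clicks"] / c["impressions"],
            # Mean dwell time is taken over clicked impressions only.
            "avg_dwell": c["dwell"] / c["clicks"] if c["clicks"] else 0.0,
        }
        for key, c in counts.items()
    }


def engagement_summary(log):
    """log: iterable of (url, clicked, dwell_seconds) impressions.

    Returns (per_url, per_domain) dicts of CTR and average dwell time.
    """
    def new():
        return {"impressions": 0, "clicks": 0, "dwell": 0.0}

    per_url, per_domain = defaultdict(new), defaultdict(new)
    for url, clicked, dwell in log:
        for counts, key in ((per_url, url), (per_domain, urlsplit(url).hostname)):
            counts[key]["impressions"] += 1
            if clicked:
                counts[key]["clicks"] += 1
                counts[key]["dwell"] += dwell
    return _summarize(per_url), _summarize(per_domain)


if __name__ == "__main__":
    log = [
        ("https://a.example/x", True, 60),
        ("https://a.example/x", False, 0),
        ("https://a.example/y", True, 120),
        ("https://a.example/y", True, 30),
    ]
    per_url, per_domain = engagement_summary(log)
    print(per_domain)  # {'a.example': {'ctr': 0.75, 'avg_dwell': 70.0}}
```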

Authoritativeness

Link diversity and PageRank

High-quality, varied backlinks from reputable sources establish domain authority.

PageRank measures the importance of pages based on the quantity and quality of links pointing to them.
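The classic PageRank computation can be sketched with power iteration. This follows the textbook formulation, with a damping factor of 0.85 and rank from dead-end pages spread evenly across all pages. Google’s production link analysis has evolved well beyond it, and the page names in the example are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict mapping page -> list of outlinked pages."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Rank held by pages with no outlinks is redistributed evenly to all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                # Each page passes its damped rank equally along its outlinks.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    ranks = pagerank({"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]})
    for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
        print(page, round(score, 3))
```

Page c collects links from three pages and ends up with the highest score, while d, which nothing links to, keeps only the baseline (1 - 0.85) / 4.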

Historical performance

Sustained ranking performance and user engagement metrics over time.

Content network strength

Interlinked, relevant content strengthens authority across the website.

Evaluated based on how well related pages are interconnected and support each other topically.

Topical focus

Demonstrates expertise through comprehensive, original coverage of specific subject areas, using relevant vocabulary and entities.

Expertise and experience

Content freshness

Refers to how frequently content is updated and maintained to stay current and relevant.

Regularly updated content is typically viewed more favorably.

Includes both creating new content and updating existing materials.

Category relevance

Involves how well content aligns with and performs within specific topical categories.

Strong performance in relevant content categories signals expertise and authority in those areas.

Sites can demonstrate expertise in both broad and niche subject areas.

Topic coverage depth

Examines how comprehensively content covers a subject matter, including both breadth and detail.

Includes satisfying both informational and navigational user intents, incorporating relevant terminology and entities, and providing thorough explanations rather than surface-level coverage.

User behavior patterns

Reflect how people interact with content over time, including metrics like click-through rates, dwell time, and navigation patterns.

For example, users moving from informational to navigational queries for a site can signal the site’s expertise and authority in that topic area.

Source entity-level signals

Trustworthiness

Contributor verification

Authenticating content creators’ personal information and identities across platforms to establish credibility.

Verifying professional credentials and educational background.

Maintaining consistent identity information.

Reputation tracking

Monitors an entity’s history of providing accurate information, the sentiment of its mentions and ratings, and an overall credibility score based on its track record.

Also includes tracking the quality and consistency of its content contributions over time.
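How a track record becomes a credibility score is not spelled out here. One common way to turn a history of accurate and inaccurate contributions into a number without over-rewarding newcomers is a Bayesian (smoothed) average, sketched below. The function name, the prior of 0.5, and the prior weight of 10 are hypothetical choices for illustration, not a documented Google formula.

```python
def credibility_score(accurate, total, prior_mean=0.5, prior_weight=10):
    """Bayesian average of an entity's accuracy record.

    With few checked contributions the score stays near prior_mean; as the
    track record grows, it converges to the observed share of accurate ones.
    """
    if accurate < 0 or total < accurate:
        raise ValueError("need 0 <= accurate <= total")
    return (accurate + prior_mean * prior_weight) / (total + prior_weight)


if __name__ == "__main__":
    print(credibility_score(0, 0))     # 0.5: no history yet
    print(credibility_score(9, 10))    # 0.7
    print(credibility_score(90, 100))  # about 0.864
```

Both records in the example are 90% accurate, but the longer one earns the higher score, which mirrors the idea that sustained contributions over time carry more weight.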

Peer endorsements

Refer to reviews, recommendations and recognition from other reputable authors and experts in the field.

Can include awards, citations of their work, and positive references from authoritative sources.

Publication history

Examines the volume, frequency and quality of content an entity has produced, including factors like originality, comprehensiveness, and sustained contributions in their area of expertise.

Regular publishing of high-quality content improves reputation scores.

Professional credentials

Encompass verified qualifications like educational background, work experience, certifications, and demonstrated expertise in specific subject areas.

Helps establish subject matter authority and relevance to the topics being covered.

Authoritativeness

Citations and references

Involve linking to authoritative sources, using relevant anchor text, and maintaining clean link profiles to demonstrate expertise and trustworthiness.

Good citation practices include referencing Google patents, research papers, and authoritative industry sources.

Recognition and awards

Tracked through “prize metrics” that signal authority and expertise, including professional accolades, industry recognition, and critical reviews contributing to an entity’s reputation score.

For instance, an author can demonstrate this by being named a top contributor to a publication such as Search Engine Land or by speaking at major industry conferences.

Brand presence

Evaluated through consistent business information, domain reputation, brand recognition in queries, and presence across authoritative platforms.

Includes verified business details, matching domain names, and established online presence.

For example, an author can build a strong brand presence through social media profiles, speaking engagements, and industry publications.

Platform consistency

Consistent identity, information, and quality across different platforms and channels.

Includes matching business information, coherent branding, and unified messaging across websites, social media, and other online presence.

Consistent author profiles and branding across multiple platforms.

Expertise and experience

Subject matter alignment

Refers to how well an author’s expertise matches their content topic.

The content creator must have relevant knowledge and experience in the field they’re writing about.

Publication consistency

Examines the volume and regularity of content contributions over time.

Includes factors like frequency of updates, long-term publishing history, and maintaining content freshness.

Original contributions

Creating unique, first-instance content rather than copied material.

Include pioneering content on specific topics, comprehensive topic coverage, and adding new value through research, analysis, or insights.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
